The introduction of visual supports can often augment spoken language comprehension for learners who are minimally verbal. For example, when directing a learner to put a box into a bag, a symbolate sentence strip of “box in bag” (see Figure 1) could improve understanding, assuming the learner understands the concept “in” as well as its representation by the graphic symbol of the dot, arrow, and box. Furthermore, to successfully complete this direction presented in visual form, the learner must recognize that word order conveys which noun is the container (i.e., the bag) and which is the object to be put in (i.e., the box) (Allen et al., 2017). Many learners with moderate to severe autism who are minimally verbal have difficulty grasping these relationships. This difficulty stems mainly from a dependence on routines and context, because processing linguistic content and structure is inherently challenging. To address a learner’s interpretive challenges, an alternative support known as a scene cue (SC) can be applied to visually represent language input in a more concrete way (Shane & Weiss-Kapp, 2008).

Figure 1. A symbolate sentence strip of “box in bag”

An SC takes the form of either a photograph (static SC) or a short video clip (dynamic SC), which a mentor shows to a learner on a mobile device or smartwatch (O’Brien et al., 2020), or in paper form, at the moment it is needed. For example, to communicate “put the box into the bag,” the mentor would speak that direction while simultaneously presenting a short video clip of a hand placing a box into a bag (dynamic SC) or a photo of a hand grasping an object as if starting to insert it into the bag (static SC). Though there are commonalities with video modeling, SCs are a type of media rather than an intervention approach; also, in contrast to video modeling, SCs do not always include a person (Schlosser et al., 2013). For instance, the SC for a play-based direction such as “make Woody eat the apple” would show a Woody figurine holding an apple to his mouth (Choe et al., 2020). Research suggests that learners with moderate to severe autism follow directions more accurately when they are presented via speech augmented with SCs than via speech alone (Schlosser et al., 2013; Remner et al., 2016; Allen et al., 2021) or via speech augmented by graphic symbol strips (Allen et al., 2021). Thus, there is considerable evidence showing that the use of SCs can improve direction-following skills.

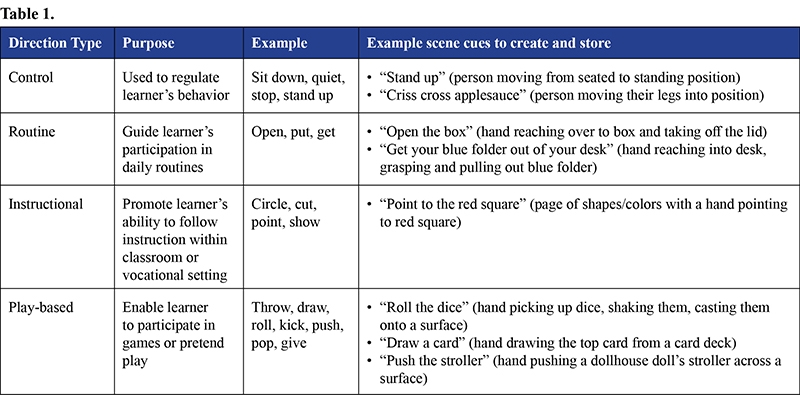

The implications of improved direction-following are best understood by examining the various types of directions that may be given within an academic, home, vocational, or community setting. Common directions can be organized into four basic types (see Table 1 above). Improved following of control directions can result in fewer disruptions and more time spent on-task; improvements in following routine directions can lead to increased independence in daily routines; improved academic and play-based direction-following can yield greater participation in various settings. Across all areas, improved direction-following leads to increased learner independence and reduced frustration for learners as well as mentors.

Consider the example of packing up at the end of the school day. In this case, the mentor says the direction, “Get your backpack.” Because the learner has difficulty following the spoken direction alone, the mentor then points to the cubby where the backpack is kept. Consequently, the learner walks in that direction but does not initiate taking the backpack. The mentor points to the backpack, repeats, “get your backpack,” gestures toward the hook, and makes a pulling motion to represent taking the backpack off the hook. If the learner needs more support, the mentor uses hand-over-hand modeling to help the learner reach up to the hook and remove the backpack. Now consider the same example using an SC. In this case, when the learner does not respond to the spoken direction, the mentor uses an iPad to display a dynamic SC of an arm reaching into a cubby and taking a backpack off a hook, while repeating the spoken direction. Because the SC augments a spoken direction with visual information that is highly concrete, the learner completes the direction with less involvement from the mentor.

Mobile technology and wearable technology (e.g., a smartwatch) are effective and convenient conveyers of SCs, both static and dynamic. SCs are simple to create and store using photo and video applications. Folder, album, and/or tagging systems make it easy for mentors to access the SCs associated with a particular activity or setting. For a learner who works in a pizza shop, mentors could make a folder called “Pizza Shop Job” that might contain SCs for directions like “fold the box,” “sweep the floor,” and “put the sodas in the fridge.” Other folder examples could be “Getting Ready” (“put your lunch in your backpack,” “put on your coat,” “put on your shoes”), “Gym” (“get the basketball,” “throw the beanbag through the hoop,” “jump onto the box”), or “Princess Dolls” (“make the doll eat/dance/sleep”). Research supports the use of SCs on smartphones, tablets, and even smartwatches, in which case a mentor texts a specific SC to a learner’s smartwatch at the moment it is needed (O’Brien et al., 2020). Depending on the learner’s needs, the SCs presented can be either dynamic or static. Some learners may always require the concreteness of dynamic SCs, while others may be successful with static SCs after some practice. Some may eventually be able to transition from static SCs to symbolate strips; indeed, when graphic symbols are paired with SCs over time, there is potential for learners to map language concepts onto them.

The SC is a tool that harnesses consumer technology to help learners develop direction-following skills and experience more independence with daily activities. Though SCs have many advantages, they are not a generative language – that is, they are not composed of units that can be flexibly combined to make various and predictable meanings, like spoken or written language – but they may help move learners toward generative language by more explicitly representing concepts visually. That, combined with the potential for increasing independence and decreasing frustration, can improve the daily lives of both learners and mentors.

For more information, contact Anna A. Allen, PhD, CCC-SLP at aaa.ccc.slp@gmail.com.

Anna A. Allen, PhD, CCC-SLP

References

Allen, A. A., Schlosser, R. W., Brock, K. L., & Shane, H. C. (2017). The effectiveness of aided augmented input techniques for persons with developmental disabilities: A systematic review. Augmentative and Alternative Communication, 33, 149-159.

Allen, A. A., Shane, H. C., Schlosser, R. W., & Haynes, C. W. (2021). The effect of cue type on direction-following in children with moderate to severe autism spectrum disorder. Augmentative and Alternative Communication, DOI:10.1080/07434618.2021.1930154

Choe, N., Shane, H., Schlosser, R. W., Haynes, C. W., & Allen, A. (2020). Direction-following based on graphic symbol sentences involving an animated verb symbol: An exploratory study. Communication Disorders Quarterly, DOI:10.1177/1525740120976332

O’Brien, A., Schlosser, R. W., Shane, H. C., Wendt, O., Yu, C., Allen, A. A., Cullen, J., Benz, A., & O’Neill, L. (2020). Providing visual supports via a smart watch to a student with autism spectrum disorder: An intervention note. Augmentative and Alternative Communication, 36, 249-257.

Remner, R., Baker, M., Karter, C., Kearns, K., & Shane, H. C. (2016). Use of augmented input to improve understanding of spoken directions by children with moderate to severe autism spectrum disorder. eHEARSAY, Journal of the Ohio Speech Language Hearing Association, 6(3), 4-10.

Schlosser, R. W., Laubscher, E., Sorce, J., Koul, R., Flynn, S., Hotz, L., Abramson, J., Fadie, H., & Shane, H. (2013). Implementing directions that involve prepositions with children with autism: A comparison of spoken cues with two types of augmented input. Augmentative and Alternative Communication, 29, 132-145.

Shane, H. C., & Weiss-Kapp, S. (2008). Visual language in autism. San Diego: Plural Publishing.